Digital in-memory compute chip startup d-Matrix has a new 3D stacked memory technology, 3DIMC, which it says will deliver 10x the memory bandwidth of HBM4, the latest high-bandwidth memory standard, and cut energy use for AI inference by up to 90 percent.

The firm was founded in 2019 by CEO Sid Sheth and CTO Sudeep Bhoja, both former executives at high-speed interconnect developer Inphi Corp, which was acquired by Marvell in 2020 for $10 billion. Its aim is to develop chip-level in-memory compute technology that gives AI inference more memory bandwidth than traditional DRAM, at much lower cost than high-bandwidth memory (HBM).

Sheth posted on LinkedIn: “We believe the future of AI inference depends on rethinking not just compute, but memory itself. We are paving the way for a new memory-compute paradigm (3DIMC) that allows our DIMC platform to continue scaling and punch through the memory wall without sacrificing memory capacity and bandwidth. By stacking memory in three dimensions and bringing it into tighter integration with compute, we dramatically reduce latency, improve bandwidth, and unlock new efficiency gains.”

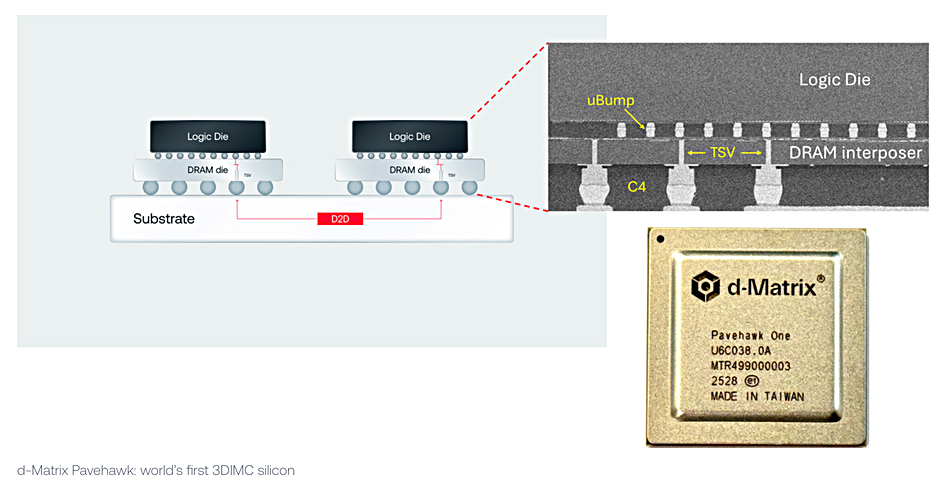

The d-Matrix technology pairs LPDDR5 memory with digital in-memory compute (DIMC) hardware attached to the memory via an interposer. The DIMC engine uses modified SRAM cells, augmented with extra transistors that perform multiplication, so that computations take place within the memory array itself. It is built on a chiplet architecture and optimized for matrix-vector multiplication, a core operation in transformer-based models. Apollo compute cores include eight DIMC units that execute 64×64 matrix multiplications in parallel and support various numerical formats (e.g. INT8, INT4, and block floating point).
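To show what a fixed 64×64 unit size implies in practice, here is a minimal Python sketch of how a larger matrix-vector product could be split into 64×64 tiles and spread across eight units, with INT8-range inputs and partial sums combined across column tiles. The function name `tile_matvec`, the round-robin scheduling, and the plain-integer arithmetic are hypothetical illustrations chosen for this example; this is not d-Matrix's software stack or hardware design.

```python
import random

TILE = 64       # tile edge, matching the 64x64 figure quoted for Apollo cores
NUM_UNITS = 8   # eight DIMC units per Apollo core, per the article

def tile_matvec(weights, x, tile=TILE, num_units=NUM_UNITS):
    """Hypothetical sketch: compute y = W @ x by splitting W into tile x tile blocks.

    weights: list of rows of ints in the INT8 range (-128..127)
    x: list of ints in the INT8 range
    Returns (y, unit_load), where unit_load counts tiles given to each unit.
    Python ints stand in for the wider accumulators INT8 hardware would use.
    """
    rows, cols = len(weights), len(x)
    assert all(len(r) == cols for r in weights), "ragged weight matrix"
    assert all(-128 <= v <= 127 for v in x), "x outside INT8 range"
    assert all(-128 <= v <= 127 for r in weights for v in r), "W outside INT8 range"

    y = [0] * rows
    unit_load = [0] * num_units
    tile_id = 0
    for r0 in range(0, rows, tile):
        for c0 in range(0, cols, tile):
            unit = tile_id % num_units  # round-robin assignment (an assumption)
            unit_load[unit] += 1
            tile_id += 1
            # The "unit" computes partial dot products for its tile only.
            for r in range(r0, min(r0 + tile, rows)):
                row = weights[r]
                acc = 0
                for c in range(c0, min(c0 + tile, cols)):
                    acc += row[c] * x[c]
                y[r] += acc  # combine partial sums across column tiles

    return y, unit_load

# Usage: a 128x256 matrix splits into 2 x 4 = 8 tiles, one per unit.
random.seed(0)
W = [[random.randint(-128, 127) for _ in range(256)] for _ in range(128)]
x = [random.randint(-128, 127) for _ in range(256)]
y, load = tile_matvec(W, x)
print(load)  # [1, 1, 1, 1, 1, 1, 1, 1]
```

The round-robin scheme is the simplest way to keep all eight units busy when the tile count is a multiple of eight; a real scheduler would also have to account for where weights physically sit in memory.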

Bhoja wrote in a blog post: “We’re bringing a state-of-the-art implementation of 3D stacked digital in-memory compute, 3DIMC, to our roadmap. Our first 3DIMC-enabled silicon, d-Matrix Pavehawk, which has been 2+ years in the making, is now operational in our labs.”

“We expect 3DIMC will increase memory bandwidth and capacity for AI inference workloads by orders of magnitude and ensure that as new models and applications emerge, service providers and enterprises can run them at scale efficiently and affordably.”

“Our next-generation architecture, Raptor, will incorporate 3DIMC into its design – benefiting from what we and our customers learn from testing on Pavehawk. By stacking memory vertically and integrating tightly with compute chiplets, Raptor promises to break through the memory wall and unlock entirely new levels of performance and TCO.”

“We are targeting 10x better memory bandwidth and 10x better energy efficiency when running AI inference workloads with 3DIMC instead of HBM4. These are not incremental gains – they are step-function improvements that redefine what’s possible for inference at scale.”
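The "10x better energy efficiency" target and a cut of "up to 90 percent" in energy use are two ways of stating the same figure. A short worked version, assuming efficiency is measured as work done per unit of energy for a fixed inference workload $W$:

$$
\frac{W}{E_{\text{3DIMC}}} = 10 \cdot \frac{W}{E_{\text{HBM4}}}
\;\Rightarrow\;
E_{\text{3DIMC}} = \frac{E_{\text{HBM4}}}{10}
\;\Rightarrow\;
1 - \frac{E_{\text{3DIMC}}}{E_{\text{HBM4}}} = 1 - \frac{1}{10} = 90\%
$$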

Bootnote

d-Matrix has had two funding rounds: a 2022 A-round raised $44 million and a 2023 B-round brought in $110 million, for a total of $154 million. It has a partnership with server composability supplier GigaIO.